NVIDIA A100 PCIe 80 GB: a look at the most powerful accelerator and the GPUDirect Storage technique

Just one day ago, we wrote that the NVIDIA A100 graphics accelerator with a PCIe connector might appear in a version with 80 GB of very high-bandwidth HBM2e memory. The weekend is barely over, and the manufacturer has already unveiled this next version of its flagship GPU for advanced HPC and artificial intelligence workloads, disclosing most of the technical specifications. The Ampere GA100 chip itself is identical to the one in the previous variants. In addition, NVIDIA revealed the first details about the GPUDirect Storage technique, which works on a similar principle to Microsoft's DirectStorage, set to debut with the Windows 11 operating system.

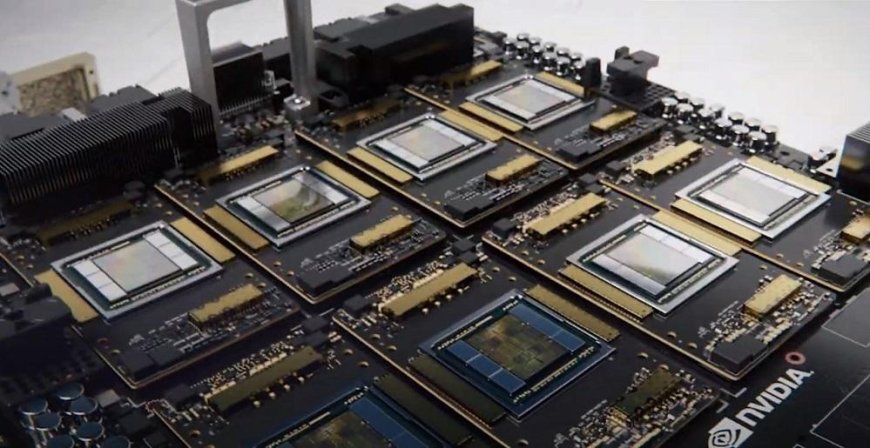

NVIDIA presented the A100 graphics accelerator with a PCIe 4.0 connector and 80 GB of HBM2e memory from Samsung with a bandwidth of 2039 GB/s. In addition, the company showed the GPUDirect Storage technique, with capabilities similar to Microsoft DirectStorage.

The NVIDIA A100 PCIe chip is built on TSMC's 7 nm process technology. The Ampere GA100 graphics core has a die area of 826 mm², which makes it a gigantic chip, and it contains 54 billion transistors. The NVIDIA A100 variant with a PCIe connector and 80 GB of memory, presented at the ISC High Performance 2021 conference, has exactly the same GA100 specifications as the earlier models. The most important difference is the use of faster HBM2e memory from Samsung, with an effective data rate of 3186 Mbps per pin. Together with the total capacity of 80 GB, the card offers an impressive bandwidth of 2039 GB/s. This is 484 GB/s more than the previous variants of the A100 accelerator (SXM4 / PCIe).
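The bandwidth figure can be checked with a little arithmetic. The sketch below is only an illustration. It assumes the quoted memory speed of 3186 is a per-pin data rate in Mb/s, and that the A100 uses a 5120-bit memory bus (a figure not given in this article). The function name `hbm_bandwidth_gb_s` is made up for this example.

```python
def hbm_bandwidth_gb_s(data_rate_mbps: float, bus_width_bits: int) -> float:
    """Peak memory bandwidth in GB/s.

    data_rate_mbps  -- effective per-pin data rate in megabits per second
    bus_width_bits  -- total width of the memory interface in bits
    """
    # bits per second across the whole bus -> bytes (/8) -> gigabytes (/1000 from MB)
    return data_rate_mbps * bus_width_bits / 8 / 1000


# Assumption: 5120-bit bus, not stated in the article.
BUS_WIDTH_BITS = 5120

new_bw = hbm_bandwidth_gb_s(3186, BUS_WIDTH_BITS)  # 80 GB variant
old_bw = new_bw - 484                              # article: 484 GB/s less on older variants

print(f"A100 80 GB: {new_bw:.0f} GB/s")
print(f"Previous A100 variants: {old_bw:.0f} GB/s")
```

Under these assumptions the result is about 2039 GB/s for the 80 GB card, which matches the quoted figure, and about 1555 GB/s for the earlier variants.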

During the ISC High Performance 2021 conference, NVIDIA also confirmed the end of the beta period for GPUDirect Storage, which operates on a similar principle to Microsoft DirectStorage. The purpose of the software is to significantly speed up access to mass storage (NVMe drives) directly from the graphics chip. As a result, CPU usage for this task is reduced by up to a factor of three. GPUDirect Storage enables direct memory access (DMA) between graphics memory and storage (fast media using the NVMe protocol). However, while Microsoft positions its technology as a consumer solution, NVIDIA, at least for now, is focusing GPUDirect Storage on its most powerful A100 accelerators, including the one equipped with 80 GB of HBM2e memory.